A recent paper, “Amazon Redshift and the Case for Simpler Data Warehouses”, published in the “Proceedings of the 2015 ACM SIGMOD International Conference on Management of Data”, supplies the answer clearly. In their section on simplicity, the AWS authors write: “We believe the success of SQL-based databases came in large part from the significant simplifications they brought to application development through the use of declarative query processing and a coherent model of concurrent execution” (page 1920).

The paper also describes the system architecture of Redshift. Redshift uses familiar DWH techniques such as:

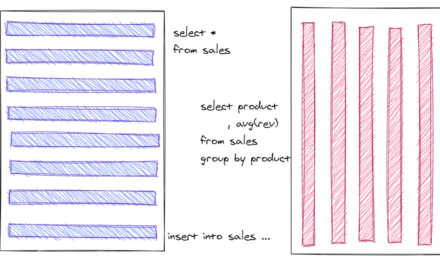

- columnar layout

- compression

- co-locating compute and data

- MPP processing

- no traditional indexes
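To make the first two items more concrete, here is a minimal sketch of a columnar layout combined with compression. It is not Redshift's storage format: the helper names (`to_columns`, `rle_encode`, `rle_decode`) and the sample rows are made up for illustration, and run-length encoding is used only as an easy-to-read stand-in for a column encoding.

```python
from itertools import groupby


def to_columns(rows):
    """Pivot a list of row dicts into one list of values per column."""
    if not rows:
        return {}
    return {name: [row[name] for row in rows] for name in rows[0]}


def rle_encode(values):
    """Run-length encode a column as (value, run_length) pairs."""
    return [(value, len(list(group))) for value, group in groupby(values)]


def rle_decode(pairs):
    """Expand (value, run_length) pairs back into the original column."""
    return [value for value, count in pairs for _ in range(count)]


# Hypothetical sample data, sorted by region so equal values are adjacent.
rows = [
    {"region": "EU", "amount": 10},
    {"region": "EU", "amount": 20},
    {"region": "EU", "amount": 5},
    {"region": "US", "amount": 7},
]

columns = to_columns(rows)
encoded_region = rle_encode(columns["region"])

# An aggregate over one column only has to read that column.
total_amount = sum(columns["amount"])
```

Because all values of a column sit next to each other, repeated values compress well, and a query that aggregates `amount` never has to touch `region`.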

The database engine is based on ParAccel. Data and computation are distributed across several nodes, with one leader node and at least one compute node. The leader node handles client connections and generates execution plans as C++ or machine code. The executable is sent to the compute nodes. The compute nodes send data back to the leader for final aggregation.
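The leader/compute split can be sketched as a scatter-gather aggregation. This is a simplified illustration, not Redshift's implementation: it runs in a single process, does not generate or compile any code, and the function names (`distribute`, `compute_node_partial`, `leader_merge`), the CRC32-based distribution, and the sample data are all assumptions.

```python
import zlib
from collections import defaultdict


def distribute(rows, node_count, key):
    """Assign each row to a compute node by hashing its distribution key."""
    nodes = [[] for _ in range(node_count)]
    for row in rows:
        node_id = zlib.crc32(str(row[key]).encode("utf-8")) % node_count
        nodes[node_id].append(row)
    return nodes


def compute_node_partial(rows, group_key, value_key):
    """Work done on one compute node: partial sum and count per group."""
    partial = defaultdict(lambda: [0, 0])
    for row in rows:
        acc = partial[row[group_key]]
        acc[0] += row[value_key]
        acc[1] += 1
    return dict(partial)


def leader_merge(partials):
    """Work done on the leader: merge the partial results into final aggregates."""
    merged = defaultdict(lambda: [0, 0])
    for partial in partials:
        for group, (total, count) in partial.items():
            merged[group][0] += total
            merged[group][1] += count
    return {
        group: {"sum": total, "count": count, "avg": total / count}
        for group, (total, count) in merged.items()
    }


# Hypothetical sample data.
sales = [
    {"customer_id": 1, "region": "EU", "amount": 10},
    {"customer_id": 2, "region": "US", "amount": 30},
    {"customer_id": 3, "region": "EU", "amount": 20},
    {"customer_id": 4, "region": "US", "amount": 50},
    {"customer_id": 5, "region": "EU", "amount": 30},
]

nodes = distribute(sales, node_count=3, key="customer_id")
partials = [compute_node_partial(rows, "region", "amount") for rows in nodes]
result = leader_merge(partials)
```

Each compute node returns sums and counts rather than averages, so the leader can combine them correctly no matter how the rows were spread across the nodes.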

Continuous delivery (or, going a step further, continuous integration or DevOps) is becoming increasingly important for reducing the time to market of client code: releases are frequent, sometimes with several deployments a day. Amazon applies the same approach to patching Redshift. Customer clusters are patched weekly within a configurable 30-minute window. Each patch is small, in contrast to the traditional approach of extensive patch sets that bundle many new functions. The patches are reversible and are rolled back if errors increase or performance degrades.
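The rollback rule can be expressed as a simple before/after comparison. The sketch below is purely illustrative: the paper quoted here does not give Amazon's actual metrics or thresholds, so `ClusterMetrics`, `should_roll_back`, and both threshold values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterMetrics:
    """Hypothetical health snapshot of a cluster."""
    error_rate: float       # failed queries divided by total queries
    p95_latency_ms: float   # 95th percentile query latency


def should_roll_back(before, after,
                     max_error_increase=0.01,   # assumed threshold
                     max_latency_ratio=1.2):    # assumed threshold
    """Return True if errors increased or performance degraded after a patch."""
    errors_increased = (after.error_rate - before.error_rate) > max_error_increase
    performance_degraded = after.p95_latency_ms > before.p95_latency_ms * max_latency_ratio
    return errors_increased or performance_degraded


before_patch = ClusterMetrics(error_rate=0.002, p95_latency_ms=800.0)

healthy = ClusterMetrics(error_rate=0.003, p95_latency_ms=820.0)
more_errors = ClusterMetrics(error_rate=0.05, p95_latency_ms=800.0)
slower = ClusterMetrics(error_rate=0.002, p95_latency_ms=1200.0)

keep_patch = not should_roll_back(before_patch, healthy)
```

Small patches keep a check like this meaningful: when a regression appears, only a handful of changes could have caused it, and reverting them is cheap.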

The paper is well worth reading and also contains many surprising details, such as “A meaningful percentage of Amazon Redshift customers delete their clusters every Friday and restore from backup each Monday” (page 1920).