The article has three main sections:

- setup of the data in the staging tables and the dbt models / snapshots

- data flow execution with initial (full) and delta load including materializations with dbt

- result discussion for view, table, incremental, and snapshot materialization

Setup

There are two staging tables stg_place_pst and stg_place_trs. Both tables represent the staging area. The tables contain the same data at the beginning but differ in handling new or changed data:

- stg_customer_pst: new or changed data is inserted as additional rows. The staging table contains all data from the beginning (persistent staging table).

- stg_customer_trs: old data is truncated before new or changed data is inserted as additional rows. The staging table contains the latest data only (transient staging table).

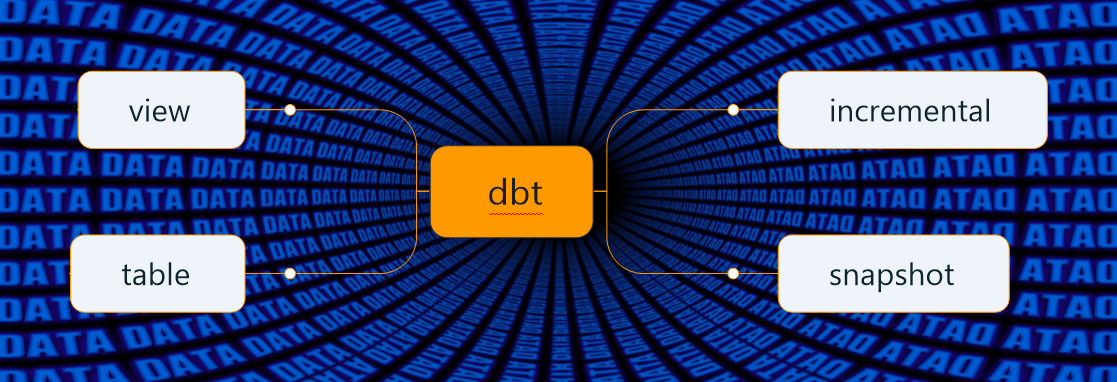

We will create four target tables for each of the staging tables with different materializations:

- view materialization

- table materialization

- incremental materialization

- snapshot materialization

Understanding the differences between the four alternatives in combination with a persistent or transient staging table is essential.

Database setup

There are two staging tables. The corresponding script is 00staging/01_create_staging.sql.

create schema abu; create table abu.stg_customer_prs (customerid integer, customername varchar(100), insertedat timestamp); create table abu.stg_customer_trs (customerid integer, customername varchar(100), insertedat timestamp);

insert into abu.stg_customer_prs values (1, 'Cust1', to_date('01.02.2020', 'DD.MM.YYYY'));

insert into abu.stg_customer_prs values (2, 'Cust2', to_date('01.02.2020', 'DD.MM.YYYY'));

insert into abu.stg_customer_trs values (1, 'Cust1', to_date('01.02.2020', 'DD.MM.YYYY'));

insert into abu.stg_customer_trs values (2, 'Cust2', to_date('01.02.2020', 'DD.MM.YYYY'));insert into abu.stg_customer_prs values (1, 'Cust1_change', to_date('02.02.2020', 'DD.MM.YYYY'));

insert into abu.stg_customer_prs values (3, 'Cust3', to_date('02.02.2020', 'DD.MM.YYYY'));

truncate table abu.stg_customer_trs;

insert into abu.abu.stg_customer_trs values (1, 'Cust1_change', to_date('02.02.2020', 'DD.MM.YYYY'));

insert into abu.abu.stg_customer_trs values (3, 'Cust3', to_date('02.02.2020', 'DD.MM.YYYY'));dbt setup: models and snapshots

The file sources.yml contains the names of the staging tables.

version: 2

sources:

- name: abu

tables:

- name: abu.stg_customer_prs

- name: abu.stg_customer_trs{{

config(

materialized='view'

)

}}

with w_cust as (

select *

from {{ source('abu', 'stg_customer_prs') }}

)

select c.customerid

, c.customername

, c.insertedat

from w_cust c{{

config(

materialized='table'

)

}}

with w_cust as (

select *

from {{ source('abu', 'stg_customer_prs') }}

)

select c.customerid

, c.customername

, c.insertedat

from w_cust c{{

config(

materialized='incremental'

)

}}

with w_cust as (

select *

from {{ source(‘abu’, ‘stg_customer_prs’) }}

— filter for an incremental run to get new data

{% if is_incremental() %}

where insertedat > (select max(insertedat) from {{ this }})

{% endif %}

)

select c.customerid

, c.customername

, c.insertedat

from w_cust c

{{

config(

materialized='incremental'

)

}}

with w_cust as (

select *

from {{ source('abu', 'stg_customer_prs') }}

-- filter for an incremental run to get new data

{% if is_incremental() %}

-- no filter for incremental/delta load. Filter just for full/initial load

{% endif %}

)

select c.customerid

, c.customername

, c.insertedat

from w_cust c{% snapshot customer_prs_snapshot %}

{{

config(

target_schema=’abu’,

unique_key=’customerid’,

strategy=’timestamp’,

updated_at=’insertedat’,

)

}}

with w_cust as (

select *

from {{ source(‘abu’, ‘stg_customer_prs’) }}

)

select c.customerid

, c.customername

, c.insertedat

from w_cust c

{% endsnapshot %}

Data flow

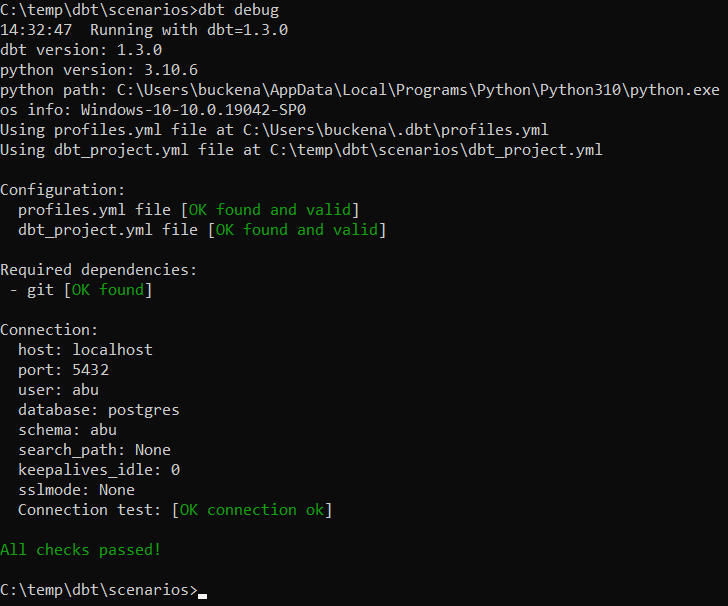

The article goes through the following data flow steps:

- Step 1: Execute in the database: 00staging/01_create_staging.sql

- Step 2: Execute in the database: 00staging/02_initial_load.sql

- Step 3: Run the integration processes with dbt run and dbt snapshot. Now check data in the target tables after the full load.

- Step 4: Execute in the database: 00staging/03_delta_load.sql

- Step 5: Run the integration processes with dbt run and dbt snapshot. Now check data in the target tables after delta load with persistent and transient staging tables.

Data Flow step 1 and 2: Table creation and initial load

The following commands create the staging tables in the schema abu and load the data (initial load).

C:\temp\dbt\scenarios>psql -f 00staging\01_create_staging.sql -U abu -d postgres -p 5432 Passwort für Benutzer abu: CREATE SCHEMA CREATE TABLE CREATE TABLE C:\temp\dbt\scenarios>psql -f 00staging\02_initial_load.sql -U abu -d postgres -p 5432 Passwort für Benutzer abu: INSERT 0 1 INSERT 0 1 INSERT 0 1 INSERT 0 1

postgres=# select * from abu.stg_customer_prs; customerid | customername | insertedat ------------+--------------+--------------------- 1 | Cust1 | 2020-02-01 00:00:00 2 | Cust2 | 2020-02-01 00:00:00 (2 Zeilen) postgres=# select * from abu.stg_customer_trs; customerid | customername | insertedat ------------+--------------+--------------------- 1 | Cust1 | 2020-02-01 00:00:00 2 | Cust2 | 2020-02-01 00:00:00 (2 Zeilen)

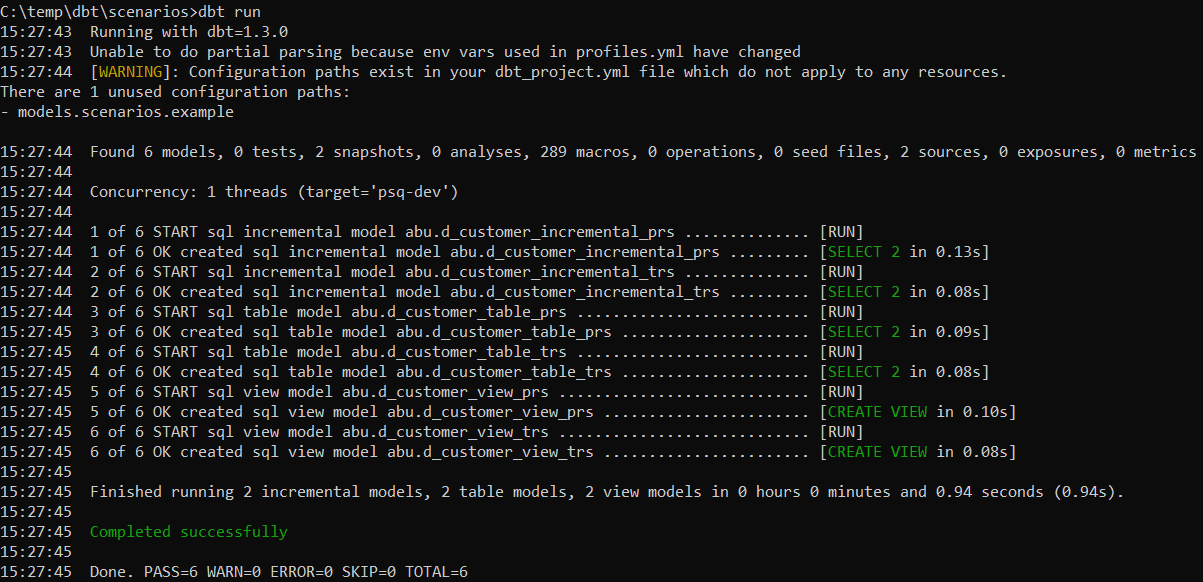

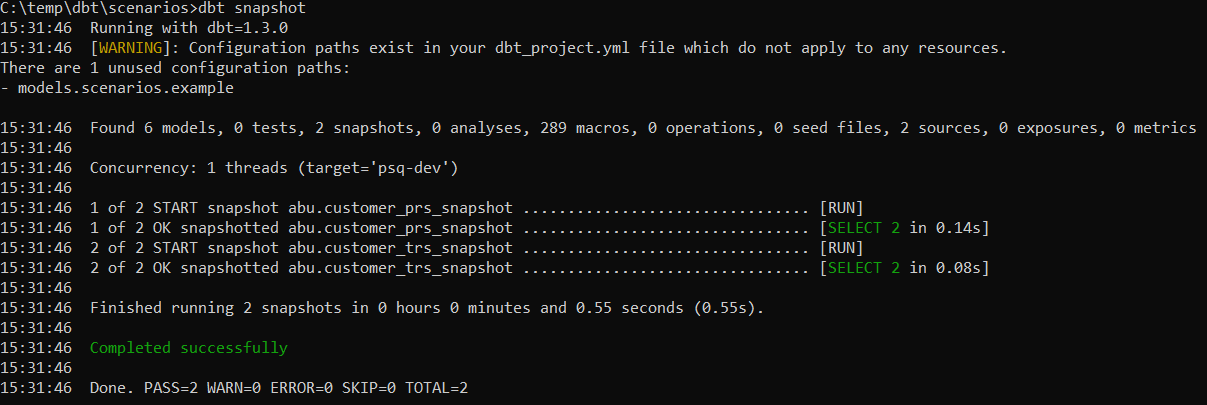

Data Flow step 3: Run the integration processes for initial load

And now let’s run the data loads with “dbt run” and “dbt snapshot”.

postgres=# select * from abu.d_customer_view_prs; customerid | customername | insertedat ------------+--------------+--------------------- 1 | Cust1 | 2020-02-01 00:00:00 2 | Cust2 | 2020-02-01 00:00:00 (2 Zeilen) postgres=# select * from abu.d_customer_view_trs; customerid | customername | insertedat ------------+--------------+--------------------- 1 | Cust1 | 2020-02-01 00:00:00 2 | Cust2 | 2020-02-01 00:00:00 (2 Zeilen) postgres=# select * from abu.d_customer_table_prs; customerid | customername | insertedat ------------+--------------+--------------------- 1 | Cust1 | 2020-02-01 00:00:00 2 | Cust2 | 2020-02-01 00:00:00 (2 Zeilen) postgres=# select * from abu.d_customer_table_trs; customerid | customername | insertedat ------------+--------------+--------------------- 1 | Cust1 | 2020-02-01 00:00:00 2 | Cust2 | 2020-02-01 00:00:00 (2 Zeilen) postgres=# select * from abu.d_customer_incremental_prs; customerid | customername | insertedat ------------+--------------+--------------------- 1 | Cust1 | 2020-02-01 00:00:00 2 | Cust2 | 2020-02-01 00:00:00 (2 Zeilen) postgres=# select * from abu.d_customer_incremental_trs; customerid | customername | insertedat ------------+--------------+--------------------- 1 | Cust1 | 2020-02-01 00:00:00 2 | Cust2 | 2020-02-01 00:00:00 (2 Zeilen) postgres=# select * from abu.d_customer_snapshot_prs; customerid | customername | insertedat | dbt_scd_id | dbt_updated_at | dbt_valid_from | dbt_valid_to ------------+--------------+---------------------+----------------------------------+---------------------+---------------------+-------------- 1 | Cust1 | 2020-02-01 00:00:00 | 15121fd6f50ff72062297f4fdb392256 | 2020-02-01 00:00:00 | 2020-02-01 00:00:00 | 2 | Cust2 | 2020-02-01 00:00:00 | e951a41c996a3a62847b2c21e5ac7efa | 2020-02-01 00:00:00 | 2020-02-01 00:00:00 | (2 Zeilen) postgres=# select * from abu.d_customer_snapshot_trs; customerid | customername | insertedat | dbt_scd_id | dbt_updated_at | dbt_valid_from | dbt_valid_to ------------+--------------+---------------------+----------------------------------+---------------------+---------------------+-------------- 1 | Cust1 | 2020-02-01 00:00:00 | 15121fd6f50ff72062297f4fdb392256 | 2020-02-01 00:00:00 | 2020-02-01 00:00:00 | 2 | Cust2 | 2020-02-01 00:00:00 | e951a41c996a3a62847b2c21e5ac7efa | 2020-02-01 00:00:00 | 2020-02-01 00:00:00 | (2 Zeilen)

Data Flow step 4: Delta load

The delta load will add new data into the staging tables:

- The staging table stg_customer_prs will get two new rows.

- The staging table stg_customer_trs will be truncated and gets two new rows.

C:\temp\dbt\scenarios>psql -f 00staging\03_delta_load.sql -U abu -d postgres -p 5432 Passwort für Benutzer abu: INSERT 0 1 INSERT 0 1 TRUNCATE TABLE INSERT 0 1 INSERT 0 1

postgres=# select * from abu.stg_customer_prs; customerid | customername | insertedat ------------+--------------+--------------------- 1 | Cust1 | 2020-02-01 00:00:00 2 | Cust2 | 2020-02-01 00:00:00 1 | Cust1_change | 2020-02-02 00:00:00 3 | Cust3 | 2020-02-02 00:00:00 (4 Zeilen) postgres=# select * from abu.stg_customer_trs; customerid | customername | insertedat ------------+--------------+--------------------- 1 | Cust1_change | 2020-02-02 00:00:00 3 | Cust3 | 2020-02-02 00:00:00 (2 Zeilen)

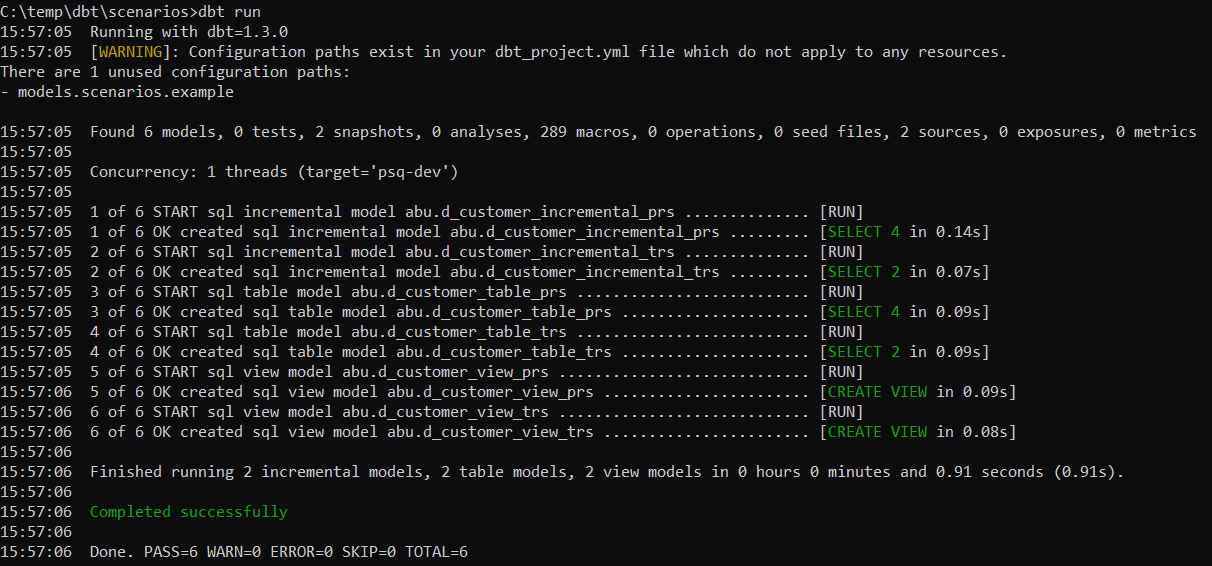

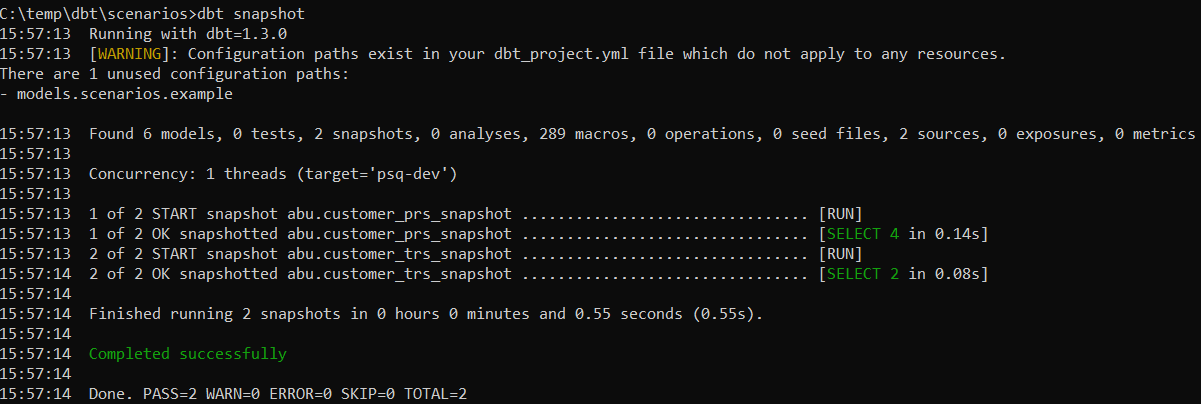

Data Flow step 5: Run the integration processes for delta load

And now let’s run again the data loads with “dbt run” and “dbt snapshot”.

postgres=# select * from abu.d_customer_view_prs; customerid | customername | insertedat ------------+--------------+--------------------- 1 | Cust1 | 2020-02-01 00:00:00 2 | Cust2 | 2020-02-01 00:00:00 1 | Cust1_change | 2020-02-02 00:00:00 3 | Cust3 | 2020-02-02 00:00:00 (4 Zeilen)

postgres=# select * from abu.d_customer_view_trs;

customerid | customername | insertedat

————+————–+———————

1 | Cust1_change | 2020-02-02 00:00:00

3 | Cust3 | 2020-02-02 00:00:00

(2 Zeilen)

postgres=# select * from abu.d_customer_table_prs;

customerid | customername | insertedat

————+————–+———————

1 | Cust1 | 2020-02-01 00:00:00

2 | Cust2 | 2020-02-01 00:00:00

1 | Cust1_change | 2020-02-02 00:00:00

3 | Cust3 | 2020-02-02 00:00:00

(4 Zeilen)

postgres=# select * from abu.d_customer_table_trs;

customerid | customername | insertedat

————+————–+———————

1 | Cust1_change | 2020-02-02 00:00:00

3 | Cust3 | 2020-02-02 00:00:00

(2 Zeilen)

postgres=# select * from abu.d_customer_incremental_prs;

customerid | customername | insertedat

————+————–+———————

1 | Cust1 | 2020-02-01 00:00:00

2 | Cust2 | 2020-02-01 00:00:00

1 | Cust1_change | 2020-02-02 00:00:00

3 | Cust3 | 2020-02-02 00:00:00

(4 Zeilen)

postgres=# select * from abu.d_customer_incremental_trs;

customerid | customername | insertedat

————+————–+———————

1 | Cust1 | 2020-02-01 00:00:00

2 | Cust2 | 2020-02-01 00:00:00

1 | Cust1_change | 2020-02-02 00:00:00

3 | Cust3 | 2020-02-02 00:00:00

(4 Zeilen)

postgres=# select * from abu.d_customer_snapshot_prs;

customerid | customername | insertedat | dbt_scd_id | dbt_updated_at | dbt_valid_from | dbt_valid_to

————+————–+———————+———————————-+———————+———————+———————

2 | Cust2 | 2020-02-01 00:00:00 | e951a41c996a3a62847b2c21e5ac7efa | 2020-02-01 00:00:00 | 2020-02-01 00:00:00 |

1 | Cust1 | 2020-02-01 00:00:00 | 15121fd6f50ff72062297f4fdb392256 | 2020-02-01 00:00:00 | 2020-02-01 00:00:00 | 2020-02-02 00:00:00

1 | Cust1_change | 2020-02-02 00:00:00 | cee665e87a7d891c22469e4417cf9b58 | 2020-02-02 00:00:00 | 2020-02-02 00:00:00 |

3 | Cust3 | 2020-02-02 00:00:00 | b60f6d826f134ce61df7bee89eb1903a | 2020-02-02 00:00:00 | 2020-02-02 00:00:00 |

(4 Zeilen)

postgres=# select * from abu.d_customer_snapshot_trs;

customerid | customername | insertedat | dbt_scd_id | dbt_updated_at | dbt_valid_from | dbt_valid_to

————+————–+———————+———————————-+———————+———————+———————

2 | Cust2 | 2020-02-01 00:00:00 | e951a41c996a3a62847b2c21e5ac7efa | 2020-02-01 00:00:00 | 2020-02-01 00:00:00 |

1 | Cust1 | 2020-02-01 00:00:00 | 15121fd6f50ff72062297f4fdb392256 | 2020-02-01 00:00:00 | 2020-02-01 00:00:00 | 2020-02-02 00:00:00

1 | Cust1_change | 2020-02-02 00:00:00 | cee665e87a7d891c22469e4417cf9b58 | 2020-02-02 00:00:00 | 2020-02-02 00:00:00 |

3 | Cust3 | 2020-02-02 00:00:00 | b60f6d826f134ce61df7bee89eb1903a | 2020-02-02 00:00:00 | 2020-02-02 00:00:00 |

(4 Zeilen)

View materialization

The view materialization shows a difference between persistent and transient scenarios:

- The result table d_customer_view_prs contains all rows from initial and delta load.

- The result table d_customer_view_trs contains the rows from the delta load only.

dbt creates a view to the staging table and does not store data in the dimension. This approach is very flexible but may be slow if huge data sets must be queried.

postgres=# \d+ abu.d_customer_view_prs Sicht abu.d_customer_view_prs Spalte | Typ | Sortierfolge | NULL erlaubt? | Vorgabewert | Speicherung | Beschreibung --------------+-----------------------------+--------------+---------------+-------------+-------------+-------------- customerid | integer | | | | plain | customername | character varying(100) | | | | extended | insertedat | timestamp without time zone | | | | plain | Sichtdefinition: WITH w_cust AS ( SELECT stg_customer_prs.customerid, stg_customer_prs.customername, stg_customer_prs.insertedat FROM stg_customer_prs ) SELECT c.customerid, c.customername, c.insertedat FROM w_cust c;

Remark: abu.d_customer_view_trs will be the same.

Table materialization

The table materialization has the same outcome as the view materialization and shows a difference between persistent and transient scenarios:

- The result table d_customer_table_prs contains all rows from initial and delta load.

- The result table d_customer_table_trs contains the rows from the delta load only.

dbt creates a new dimension table with every dbt run. Data is stored in the dimension compared to the view materialization. Therefore, queries on the dimension will be faster, but data copy will take time, too.

postgres=# \d+ abu.d_customer_table_prs Tabelle abu.d_customer_table_prs Spalte | Typ | Sortierfolge | NULL erlaubt? | Vorgabewert | Speicherung | Kompression | Statistikziel | Beschreibung --------------+-----------------------------+--------------+---------------+-------------+-------------+-------------+---------------+-------------- customerid | integer | | | | plain | | | customername | character varying(100) | | | | extended | | | insertedat | timestamp without time zone | | | | plain | | | Zugriffsmethode: heap Remark: abu.d_customer_table_trs will be the same.

Incremental materialization

The incremental materialization leads to the same data. Note that the module configuration is different (see above).

dbt creates a new table with the first run. Subsequent runs will refresh the table without recreating it compared to table materialization.

postgres=# \d+ abu.d_customer_incremental_prs Tabelle abu.d_customer_incremental_prs Spalte | Typ | Sortierfolge | NULL erlaubt? | Vorgabewert | Speicherung | Kompression | Statistikziel | Beschreibung --------------+-----------------------------+--------------+---------------+-------------+-------------+-------------+---------------+-------------- customerid | integer | | | | plain | | | customername | character varying(100) | | | | extended | | | insertedat | timestamp without time zone | | | | plain | | | Zugriffsmethode: heap Remark: abu.d_customer_incremental_trs will be the same.

Snapshot materialization

The snapshot materialization leads to the same data. The snapshot materialization is the basis for building slowly changing dimensions type 2.

dbt creates a new table with the first run. Subsequent runs will refresh the table without recreating it compared to table materialization. dbt also updates valid_from and valid_to dates for the defined unique key.

postgres=# \d+ abu.d_customer_snapshot_prs Tabelle abu.d_customer_snapshot_prs Spalte | Typ | Sortierfolge | NULL erlaubt? | Vorgabewert | Speicherung | Kompression | Statistikziel | Beschreibung ----------------+-----------------------------+--------------+---------------+-------------+-------------+-------------+---------------+-------------- customerid | integer | | | | plain | | | customername | character varying(100) | | | | extended | | | insertedat | timestamp without time zone | | | | plain | | | dbt_scd_id | text | | | | extended | | | dbt_updated_at | timestamp without time zone | | | | plain | | | dbt_valid_from | timestamp without time zone | | | | plain | | | dbt_valid_to | timestamp without time zone | | | | plain | | | Zugriffsmethode: heap Remark: abu.d_customer_snapshot_trs will be the same.

Concluding remarks

The scenarios show that it’s essential to know how the staging (source table) is refreshed:

- view and table materialization produce different results

- incremental can produce the same result with a properly configured model file

- snapshot can produce the same result

The scenarios containing a table also show that a primary key is never created. While it can make sense not to use primary keys for performance reasons, a developer needs to choose whether a primary key is necessary. Therefore, the tool has to offer options like primary key, foreign key, partitioning, etc. Hooks are available as a workaround the create additional objects.

dbt, as a self-service engineering tool, helps Data Scientists, Data Analysts, or other Data Engineers to implement data flows. At the same time, Data Engineers may require more easy control on how to create the target objects physically (Primary keys, partitioning, etc).

The source code for the scenarios is available on my dbt github repository.