His central idea is to use “Generic SQL Programming”: SQL programs generate dynamic SQL for building a Data Warehouse. He defined generic algorithms in the general book and the implementation in the DB specific books.

His generator approach is based on a DWH reference architecture containing

- Staging region for short-term staging data from the source systems

- Integration region for value-adding data transformations

- Storing region including

- Central repository area in 3NF for long-term storage of the integrated detail data

- Materialized View area in a dimensional model (Star schema) for efficient data access or alternatively business views directly on data in the Central repository area if the views are fast enough

The generator is completely based

on metadata tables within the DWH database. As a prerequisite, it is assumed that the project specific DWH tables for staging, integration, or storing region are already created in the DWH. Stored Procedures are defined in the book and are available to be configured with project-specific tables, columns, etc.

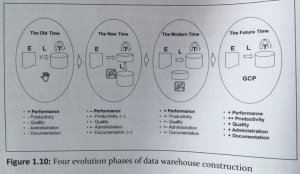

He regards such code generators (“GCP” = genericity-centralising programs) as the future ELT paradigm as such generators store the complete metadata within the database, are independent of tool vendors and are open for project-specific adjustments. Some of his design principles are

- capture all table-specific information in metadata tables

- code static domain-generic knowledge in Metadata-driven Generic Operators and dynamic domain-generic knowledge in user-define metadata tables

A metadata-driven Generic Operators is for example “Filter&Cleaner”. The operator is specified by metadata about filtering and cleaning rows from an input table.

The general book contains and explains the theory. The DB specific books contain tons of source code, e.g. Stored Procedures for Staging Loads, or Fact Table Loads and helper SPs like TransformerGenerator or JoinGenerator. The books provide many ideas that can be used as an inspiration for writing or enriching own code generators. The author prefers to transform the data before storing it in 3NF in the central repository area. That’s a classical (Inmon) view on Data Warehousing. Data Vault would push the transformation downstream and store the data in a Data Vault model.