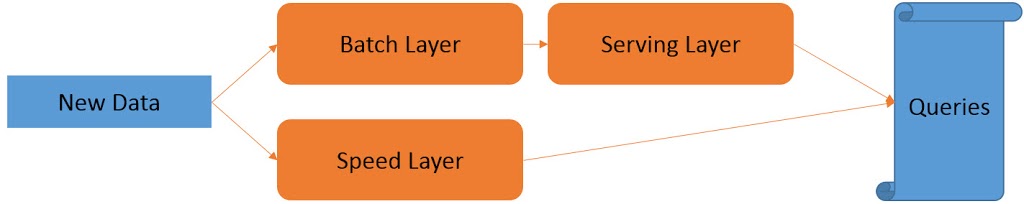

The Lambda Architecture became widely known through Nathan Marz and James Warren’s book on Big Data. The authors describe a data processing architecture that handles batch and real-time data flows at the same time. Fault tolerance and the balance of latency versus throughput are the main goals of the architecture. They distinguish three layers:

- Batch layer for storing raw data and providing the possibility to recompute all information that is derived from raw data

- Speed layer for serving data in real time by providing incremental refreshes

- Serving layer consisting of “logical views” on the results from the batch layer (older and historical data) and the speed layer (latest/hot data)
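To make the interplay of the three layers concrete, here is a minimal sketch in Python. The page-view counter, the event tuples, and all function names are assumptions chosen for illustration; they are not taken from the book.

```python
from collections import Counter

# Hypothetical raw events: (timestamp, page) pairs for a page-view counter.
master_dataset = [(1, "home"), (2, "about"), (3, "home")]  # covered by the last batch run
recent_events = [(4, "home"), (5, "contact")]              # arrived after the last batch run


def compute_batch_view(events):
    """Batch layer: recompute the whole view from the raw data."""
    return Counter(page for _, page in events)


def update_speed_view(view, event):
    """Speed layer: incrementally refresh the view for one new event."""
    _, page = event
    view[page] += 1
    return view


def query(batch_view, speed_view, page):
    """Serving layer: merge historical and latest results for one page."""
    return batch_view[page] + speed_view[page]


batch_view = compute_batch_view(master_dataset)
speed_view = Counter()
for event in recent_events:
    update_speed_view(speed_view, event)

home_views = query(batch_view, speed_view, "home")
```

Note that `compute_batch_view` and `update_speed_view` implement the same counting logic twice. Even in this toy example, both have to agree on the result of a full recomputation.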

A lot has been written about the inherent complexity of the Lambda architecture. The batch and speed layers require different code bases that must be maintained and kept in sync, and these code bases must produce the same result for both layers. Jay Kreps proposed the Kappa architecture as a less complex alternative that avoids the duplicated code base.

Nathan Marz/James Warren did not focus only on the three layers – batch, speed, and serving. They also focused on handling data as an asset and giving data a structure. IMO, this topic is the most important part of the book, given all the recent discussions about Data Lakes, Data Reservoirs, schema-on-read, etc.

The authors list three key properties of data for building a system capable of answering queries of any kind:

- Rawness

Store the data as it is. Don’t make any aggregations or transformations before storing it.

- Immutability

Don’t update or delete data, just add more.

- Perpetuity

A piece of data, once true, must always be true.

They recommend storing incoming data as a master dataset of timestamped facts in the batch layer. They do not recommend other structures like XML or JSON: “Many developers go down the path of writing their raw data in a schemaless format like JSON. This is appealing because of how easy it is to get started, but this approach quickly leads to problems. Whether due to bugs or misunderstandings between different developers, data corruption inevitably occurs” (see page 103, Nathan Marz, “Big Data: Principles and best practices of scalable realtime data systems”, Manning Publications).
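A master dataset of timestamped facts can be sketched as follows. This is only an illustration under assumptions: the `Fact` fields, the `MasterDataset` class, and the sample values are hypothetical and not taken from the book.

```python
from dataclasses import dataclass


@dataclass(frozen=True)  # frozen: a fact cannot be modified after creation
class Fact:
    entity_id: str
    attribute: str
    value: str
    timestamp: int  # point in time at which the fact was true


class MasterDataset:
    """Append-only store: facts are added, never updated or deleted."""

    def __init__(self):
        self._facts = []

    def append(self, fact):
        self._facts.append(fact)

    def history(self, entity_id, attribute):
        """All facts for one attribute of one entity, oldest first."""
        matching = [f for f in self._facts
                    if f.entity_id == entity_id and f.attribute == attribute]
        return sorted(matching, key=lambda f: f.timestamp)

    def current_value(self, entity_id, attribute):
        """Derive the latest value; older facts remain available."""
        facts = self.history(entity_id, attribute)
        return facts[-1].value if facts else None


dataset = MasterDataset()
dataset.append(Fact("person-1", "location", "Zurich", timestamp=100))
dataset.append(Fact("person-1", "location", "Berlin", timestamp=200))

current = dataset.current_value("person-1", "location")
```

A change of location is recorded as a second fact rather than as an update. The fact that the person lived in Zurich at timestamp 100 stays true forever, which is what the perpetuity property asks for.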

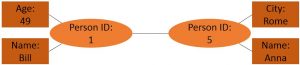

Nathan Marz/James Warren describe the master dataset as the source of truth in the system. Data is stored non-redundantly, and therefore the master dataset must be safeguarded from corruption. The fact-based model should be implemented using a graph-like schema. The graph schema consists of nodes and edges; properties describe the content. The authors use an enforceable schema instead of JSON. JSON would provide schema-on-read benefits like simplicity and flexibility, but it would also allow storing any nonsense. Additionally, the master dataset must support data evolution, because a company’s data types will change considerably over the years. JSON could easily store the changes, but nobody would be able to interpret the data (structure) from several years ago.
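The difference between an enforceable graph schema and free-form JSON can be illustrated with a small sketch. It uses plain Python dataclasses as a stand-in for a schema framework; the node, edge, and property types as well as the validation rules are assumptions made for this example.

```python
from dataclasses import dataclass

ALLOWED_PERSON_PROPERTIES = {"name", "location"}  # hypothetical property set


@dataclass(frozen=True)
class Person:  # node
    person_id: str

    def __post_init__(self):
        if not isinstance(self.person_id, str) or not self.person_id:
            raise ValueError("person_id must be a non-empty string")


@dataclass(frozen=True)
class Page:  # node
    url: str

    def __post_init__(self):
        if not isinstance(self.url, str) or not self.url.startswith("http"):
            raise ValueError("url must start with http")


@dataclass(frozen=True)
class PageView:  # edge between a Person node and a Page node
    person: Person
    page: Page
    timestamp: int

    def __post_init__(self):
        if not isinstance(self.person, Person) or not isinstance(self.page, Page):
            raise TypeError("a PageView must connect a Person to a Page")


@dataclass(frozen=True)
class PersonProperty:  # property describing a Person node
    person: Person
    name: str
    value: str
    timestamp: int

    def __post_init__(self):
        if self.name not in ALLOWED_PERSON_PROPERTIES:
            raise ValueError(f"unknown property: {self.name}")


def is_valid(build):
    """Return True if the given constructor call passes the schema checks."""
    try:
        build()
        return True
    except (TypeError, ValueError):
        return False


ok = is_valid(lambda: PageView(Person("p1"), Page("http://example.com"), 10))
bad_edge = is_valid(lambda: PageView("p1", Page("http://example.com"), 10))
bad_property = is_valid(lambda: PersonProperty(Person("p1"), "shoe_size", "42", 1))
```

A JSON document would accept the malformed edge and the unknown property without complaint. Here both are rejected at write time, before they can reach the master dataset.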

There are quite a few similarities to Data Vault. The three key properties mentioned above are also a fundamental part of Data Vault. The architecture is completely different, though, as the Data Vault architecture does not have or need two parallel layers (batch and speed). Because data is not transformed on the way to the Core Layer (Data Lake), Data Vault presumes that data can arrive and be processed in real time. Data Vault focuses especially on the integration of several source systems. Business/natural keys are a key concept for achieving this integration. Data Vault addresses the principle of the single version of facts, which means storing 100% of the data all of the time.
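How business keys integrate several source systems can be sketched roughly like this. The hub and satellite structures are reduced to in-memory dictionaries, and the source names, attributes, and the key normalization rule are assumptions for illustration only.

```python
from collections import defaultdict

hub_customer = {}                 # business key -> metadata of the first load
sat_customer = defaultdict(list)  # business key -> insert-only attribute rows


def load(record_source, business_key, attributes, load_ts):
    """Load one source record: register the key once, append the attributes."""
    key = business_key.strip().upper()  # assumed normalization rule
    # The hub stores each business key only once, whichever source delivers it first.
    hub_customer.setdefault(key, {"record_source": record_source, "load_ts": load_ts})
    # Satellite rows are only ever appended, never updated or deleted.
    sat_customer[key].append(
        {"record_source": record_source, "load_ts": load_ts, **attributes}
    )


# Two hypothetical source systems deliver the same customer.
load("crm", "c-1001 ", {"name": "Ada"}, load_ts=1)
load("billing", "C-1001", {"payment_terms": "30 days"}, load_ts=2)

customer_rows = sat_customer["C-1001"]
```

Both source records end up under the same business key, so the data is integrated without transforming or discarding anything the sources delivered.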

Providing data to end-user tools is normally done by physically storing results in dimensional data marts (Data Reservoirs) or, if the hardware is fast enough, as virtual views on Data Vault tables. The Lambda architecture has to combine data from the batch and speed layers. Nathan Marz/James Warren provide a detailed description and conclude that there is currently a lack of tooling. Though they introduce ElephantDB as an alternative to Cassandra or HBase, the lack of tooling for the serving layer is a huge downside of the Lambda architecture.

Regardless of which modeling approach is used – graph schema, Data Vault, Rogier Werschull’s PS-3C, or any other – data modeling is still a necessity for Data Lakes, or whatever they are called. Dumping data into a Data Lake without structure sounds appealing, but data modeling must be done at least at the logical level. Otherwise, the technical debt of blindly dumping data into the lake has to be paid afterward.