If you live in Python notebooks all day, it’s only a matter of time until you want proper SQL right next to your code. No extra UI, no switching tools, just plain SQL with output.

This article covers:

-

Why running SQL inside a notebook is useful

-

How to enable it in Google Colab with DuckDB + JupySQL (with a working example)

-

Why JupySQL over ipython-sql

Why running SQL inside a notebook is useful

You could absolutely do everything in plain Python. But combining SQL + notebooks gives you a few nice superpowers:

- Literate analytics

You can mix Markdown, SQL, and Python in one place: explain what you’re doing, show the query, show the result. Perfect for teaching, documentation, and handovers. - Use the right tool for the job

Many people (including analysts) can read and reason about SQL immediately. Complex filters, joins, and aggregations are often more readable in SQL than in nested pandas calls. - Single, simple environment

No need to spin up a separate SQL editor or database UI. With a lightweight database like DuckDB you even get an embedded OLAP database in-process, so everything runs in your notebook (or Colab runtime) without extra infrastructure. - Run SQL, then

.DataFrame()the result into pandas for plotting, machine learning, or anything else you like.

All of this fits nicely with the data.KISS mindset: keep the environment simple, keep the workflow close to where the thinking happens. The following cell gives an impression how it looks like.

How to enable it in Google Colab with DuckDB + JupySQL

Let’s walk through the minimal setup for Google Colab using:

-

DuckDB as the SQL engine

-

JupySQL for the

%sql/%%sqlmagics -

The famous penguins dataset as sample data

The example below is based on the official JupySQL + DuckDB integration docs and tutorials.

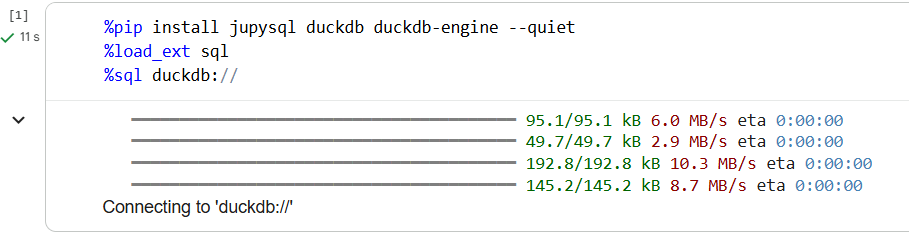

Install and load the extension

In a Colab code cell:

What this does:

-

%pip install ...– installs JupySQL, DuckDB, and the DuckDB SQLAlchemy driver in the current runtime -

%load_ext sql– activates the JupySQL IPython extension -

%sql duckdb://– opens a connection to an in-memory DuckDB database using a standard SQLAlchemy URL

If everything is fine, you’ll see something like:

-

%sql — line magic (single-line queries)

-

%%sql — cell magic (multi-line queries)

Load some data (penguins.csv)

Next, download a CSV and store it locally in the Colab filesystem:

Create a DuckDB table from CSV

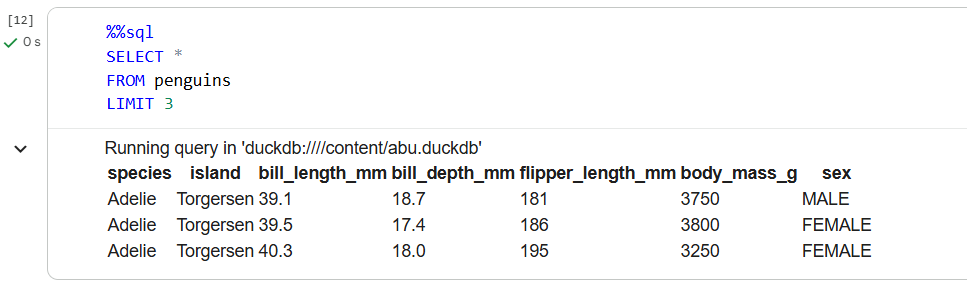

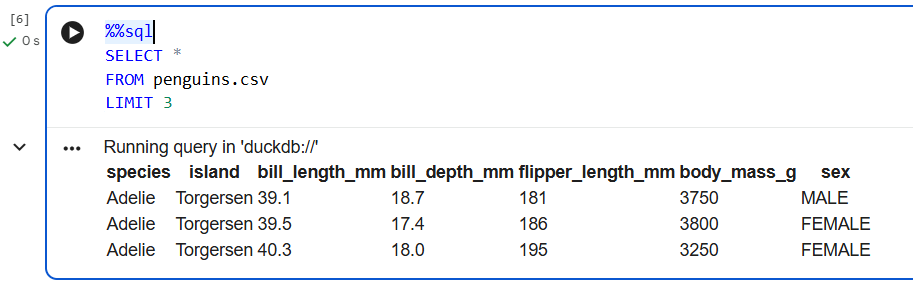

Now we stay inside SQL:

%%sql

CREATE TABLE penguins AS

SELECT * FROM penguins.csv

DuckDB can query CSVs directly, and JupySQL passes this statement to DuckDB. The penguins.csv reference is handled by DuckDB’s built-in CSV reader.

You can take a quick peek:

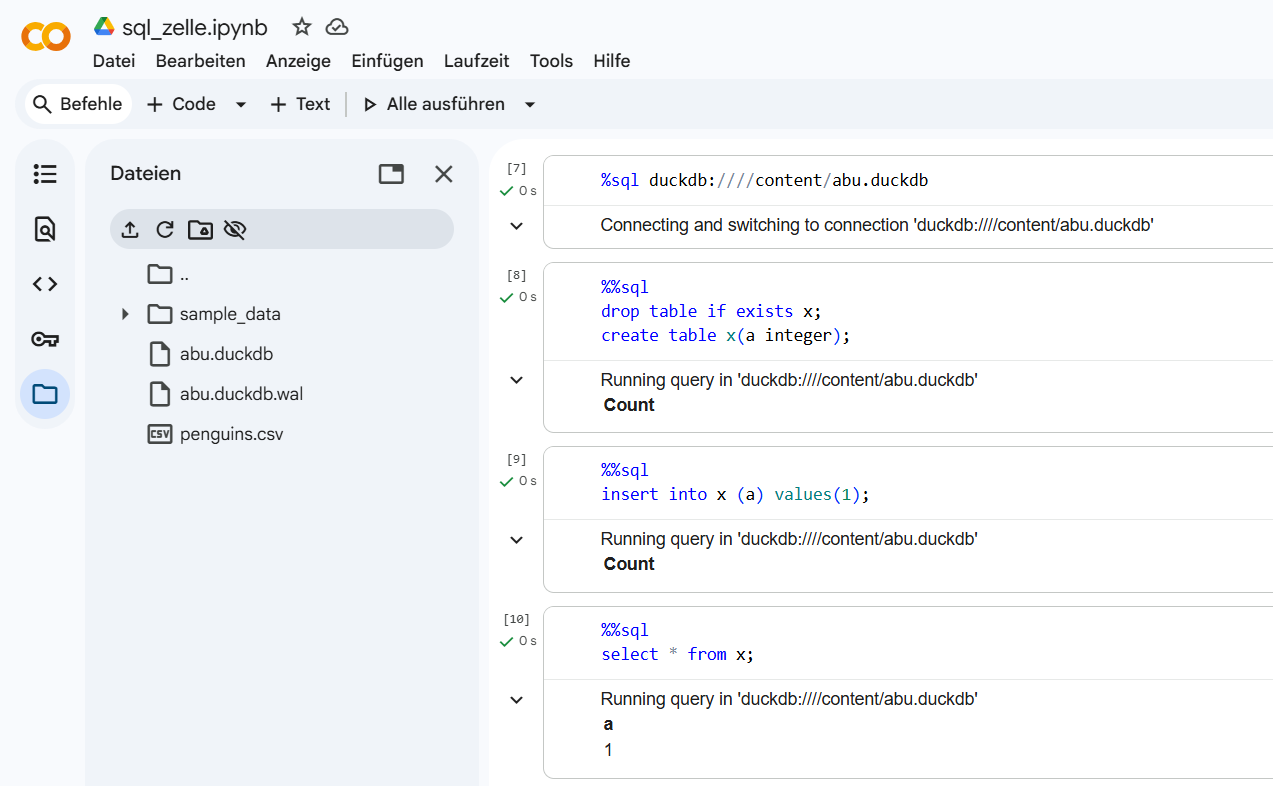

Persisting DuckDB to a file

In Colab, your in-memory DB is gone as soon as the runtime dies. If you want something permanent, connect to a file-backed database with /content as root directory in Colab:

Reconnecting later with the same duckdb:////content/abu.duckdb URL lets you pick up where you left off, as long as the file still exists in /content.

Why JupySQL instead of ipython-sql?

Historically, ipython-sql was the standard %sql extension in Jupyter. JupySQL actually started as a fork of ipython-sql and is now the actively maintained project, with new features like plotting, better DuckDB integration, and compatibility with modern environments.

So why JupySQL, especially in Colab?

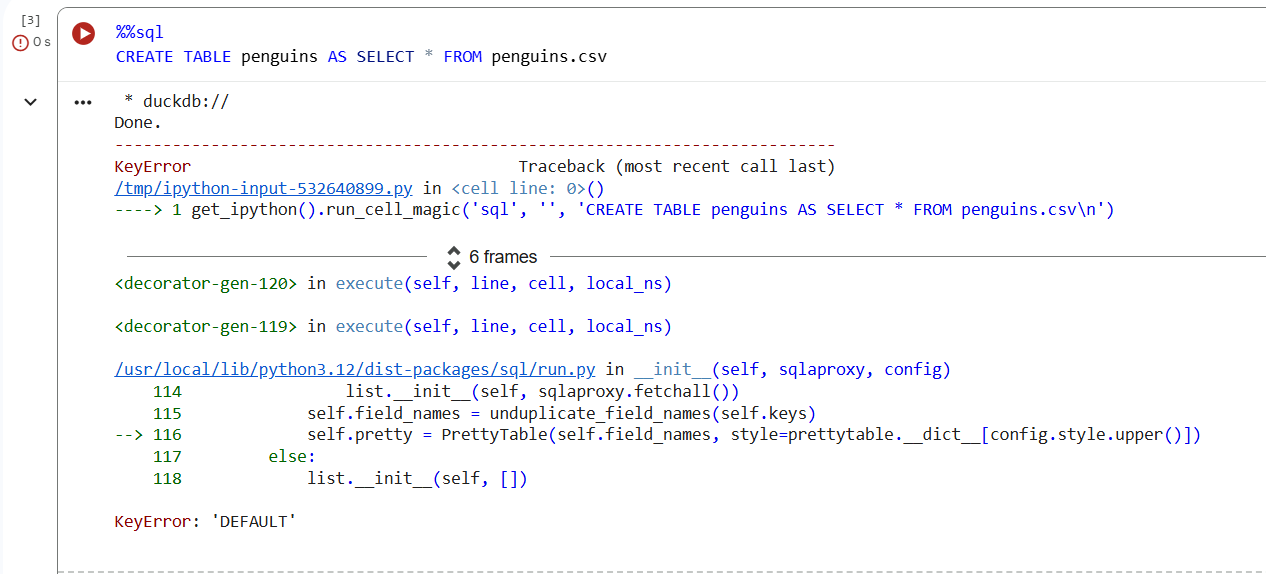

In Colab, using ipython-sql together with %%sql / % sql magic can lead to a rather annoying error when you try to run a SQL cell, even though the connection itself works:

The error occurs deep inside the table-rendering logic (PrettyTable style handling). The stack trace itself isn’t helpful, and debugging it inside a throwaway Colab environment is not exactly fun. Getting the error solved is quite a pain.

With the same setup, JupySQL just… works. The exact same SQL statements run fine, and results are rendered without that KeyError: 'DEFAULT' surprise.

JupySQL is the maintained path forward

From the ipython-sql GitHub README:

IPython-SQL’s functionality and maintenance have been eclipsed by JupySQL, a fork maintained and developed by the Ploomber team. Future work will be directed into JupySQL.

That means:

-

Bugfixes and new features land in JupySQL, not ipython-sql

-

The DuckDB docs and examples now show JupySQL as the recommended way to work with DuckDB in notebooks

-

The broader ecosystem (like PRQL’s Jupyter integration or other tools) also builds on JupySQL

From a “Keep It Simple” perspective, standardizing on the tool that’s actively maintained is an easy win.

Trackbacks/Pingbacks